Update: See a more complete demo using a malware tool set I’ve created called Blazescan

I’ve been reviewing the techniques I use to investigate hacked websites, a common task in my day-to-day work, and that review has turned into a bit of a project. First I’d like to walk through the common techniques I apply. This isn’t a write-up of one specific case; it’s a general process you can use as a starting point for investigating an intrusion on a web server.

I am working on a VM with a host of infections that I can share so you can practice these techniques, along with a write-up showing where all of the flags are so you can check your work. Without further ado, let’s get started.

Part 1 environment

First of all, the vast majority of web servers run fairly similar configurations. Here we will focus on Linux/Unix, which according to W3Techs accounts for about two thirds of all web server operating systems. The most common web content management system by far is WordPress, with over 50% of the CMS ecosystem and 28% of all websites.

With this in mind, most of the compromises I deal with involve WordPress sites, likely due to selection bias and poor patch management among these deployments.

With all that out of the way, one of the best things you can do is start from known-good files: a copy of the site’s files that you know are clean. A good general-purpose tool for this is OSSEC, but that’s a topic for another day. For WordPress there is a useful tool called wp-cli, which allows shell-driven management of WordPress. One of its best uses from a security perspective is verifying the core WordPress files against the official WordPress repository. (In current wp-cli releases the equivalent command is wp core verify-checksums.)

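On a clean install the check passes with no warnings:

[user@host home]$ wp checksum core --path=/home/user/public_html

Success: WordPress install verifies against checksums.

On the infected test site the same check flags a file that does not belong in core. Note that the run still reports success because the shipped core files match, so the warning lines are what to look at:

user@ubuntu-malware:~/public_html$ wp checksum core

Warning: File should not exist: wp-admin/x.php

Success: WordPress install verifies against checksums.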
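When a site produces many warnings, it can help to reduce the output to just the flagged paths. The following is a minimal sketch, assuming the warning wording shown in the sample output above; the helper name unexpected_core_files is made up for illustration, and other wp-cli versions may word the warning differently.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read wp-cli checksum output on stdin and print only
# the paths reported as "File should not exist".
# Assumes the exact warning prefix seen in the sample output above.
unexpected_core_files() {
    sed -n 's/^Warning: File should not exist: //p'
}

# Usage, from inside the WordPress docroot. stderr is merged into stdout
# because wp-cli may not write its warnings to stdout:
#   wp checksum core 2>&1 | unexpected_core_files
```

Each path it prints is a file sitting inside the core directories that is not part of the official release, which makes it a good first candidate for review.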

Part 2 hunting

You may already have some markers to investigate from the last step, but other searches can turn up more: standard virus/malware scanners, plus manual searches for known markers.

Update(5/2018): Try out a new scanner I built

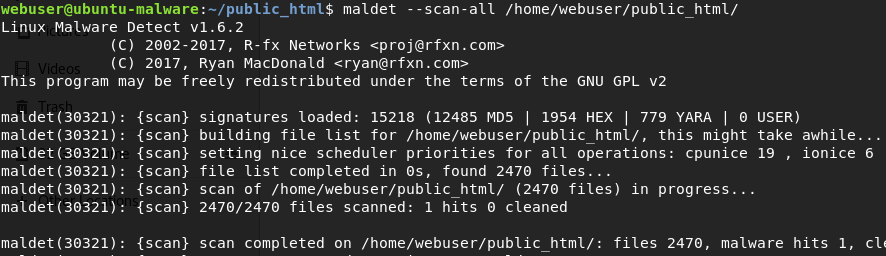

Linux Malware Detect (maldet) is a common scanner for Linux web servers:

webuser@ubuntu-malware:~/public_html$ maldet --scan-all /home/webuser/public_html/

Linux Malware Detect v1.6.2

(C) 2002-2017, R-fx Networks <proj@rfxn.com>

(C) 2017, Ryan MacDonald <ryan@rfxn.com>

This program may be freely redistributed under the terms of the GNU GPL v2

maldet(30321): {scan} signatures loaded: 15218 (12485 MD5 | 1954 HEX | 779 YARA | 0 USER)

maldet(30321): {scan} building file list for /home/webuser/public_html/, this might take awhile...

maldet(30321): {scan} setting nice scheduler priorities for all operations: cpunice 19 , ionice 6

maldet(30321): {scan} file list completed in 0s, found 2470 files...

maldet(30321): {scan} scan of /home/webuser/public_html/ (2470 files) in progress...

maldet(30321): {scan} 2470/2470 files scanned: 1 hits 0 cleaned

For manual searches, we want to look inside files for markers of code commonly used in backdoors and obfuscation.

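find / -type f -name '*.php' -exec grep -HnF -e base64_decode -e sh_decrypt_phase -e eval -e rot13 -e gzinflate {} + 2>/dev/null

Malware terms: base64_decode, sh_decrypt_phase, eval, rot13, gzinflate

Note: type the quotes around *.php as plain ASCII quotes. The curly quotes that blog formatting produces are not treated as quotes by the shell, which is a likely reason the pattern misbehaves on some systems when the command is copied and pasted. Using find with -exec rather than a for loop also keeps file names containing spaces intact.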
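On a large site a raw list of matches gets noisy, so it can be useful to rank files by how many lines hit one of the terms. The following is a minimal sketch using the same term list; the function name rank_suspicious_php is made up for illustration, and it assumes GNU find, grep, and awk.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<matching lines><TAB><file>" for every PHP file
# under the given directory that contains at least one of the marker terms,
# highest count first. The terms are matched as fixed strings, not regexes.
rank_suspicious_php() {
    local dir="$1"
    find "$dir" -type f -name '*.php' -exec grep -HcF \
        -e base64_decode -e sh_decrypt_phase -e eval \
        -e rot13 -e gzinflate {} + 2>/dev/null \
      | awk -F: '$NF > 0 {
            # grep -Hc prints "path:count"; the count is the last field,
            # so everything before the final colon is the path.
            print $NF "\t" substr($0, 1, length($0) - length($NF) - 1)
        }' \
      | sort -rn
}

# Usage:
#   rank_suspicious_php /home/webuser/public_html
```

Treat the ranking as a list of leads, not verdicts. Legitimate code also calls functions such as base64_decode, and a fixed string like eval matches inside longer words as well.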

Part 3 track the path and timing

As you locate possibly malicious files, make sure you capture accurate timestamps so that you can correlate them with the logs and use them to find other files created or modified in the same time interval.

The stat command is a great way to grab a file’s timestamps.

webuser@ubuntu-malware:~/public_html$ stat wp-login.php

File: 'wp-login.php'

Size: 34327 Blocks: 72 IO Block: 4096 regular file

Device: fc00h/64512d Inode: 277715 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 1001/ webuser) Gid: ( 1001/ webuser)

Access: 2017-09-24 09:31:21.549742638 -0400

Modify: 2017-05-12 17:12:46.000000000 -0400

Change: 2017-09-01 12:19:36.983238279 -0400

Birth: -
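To compare several suspect files at once, stat can print a single chosen timestamp per file. The following is a minimal sketch, assuming GNU coreutils stat (the -c format option is not available on BSD stat); ctime_report is a made-up helper name. It lists each file’s Change time, oldest first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<change time> <file>" for each argument,
# sorted from oldest to newest change time.
# Assumes GNU stat format sequences:
#   %Z = change time in epoch seconds (used only as the sort key)
#   %z = change time, human-readable
#   %n = file name
ctime_report() {
    stat -c '%Z %z %n' -- "$@" | sort -n | cut -d' ' -f2-
}

# Usage:
#   ctime_report wp-login.php wp-admin/x.php
```

Files that were dropped or altered in the same attack tend to cluster together in this listing.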

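The Change time is the one to pay attention to. It is updated by the kernel whenever the file’s contents or metadata change and cannot be set directly with tools like touch, so it is less likely to have been tampered with than the Modify time. With it you can search the site’s domlogs (the per-domain access logs) to find the potential point of compromise, the actor’s TTPs (tactics, techniques, and procedures), and other indicators of compromise (IPs, user agents, etc.). In this case, a request logged two seconds before the Change time above stands out: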

10.X.X.83 - - [01/Sep/2017:12:19:34 -0400] "POST /wp-content/themes/twentysixteen/wxosa.php?path=&c0de_inject HTTP/1.1" 200 3729 "http://malware.lan/wp-content/themes/twentysixteenwxosa.php?path=&c0de_inject" "Mozilla/5.0 (X11; Linux x86_64; rv:52.0) Gecko/20100101 Firefox/52.0"

When grepping the domlogs, be sure to look for POST requests: most sites see far fewer of them than GETs, so filtering on them can help you locate the actors.
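One way to apply this is to count POST requests per client IP and path. The following is a minimal sketch, assuming the log uses the combined format of the sample line above (client IP in the first field, method in the sixth, path in the seventh); post_summary is a made-up helper name and the log path in the usage comment is a placeholder.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarise POST requests in an access log as
# "<count> <client ip> <request path>", most frequent first.
# Assumes whitespace-separated combined log format, where field 6 is the
# quoted method (for example "POST) and field 7 is the request path.
post_summary() {
    awk '$6 == "\"POST" { count[$1 " " $7]++ }
         END { for (k in count) print count[k], k }' "$1" \
      | sort -rn
}

# Usage (placeholder path, substitute the domlog for the site):
#   post_summary /path/to/domlog
```

A script in a theme or upload directory receiving POSTs from a single address, like wxosa.php in the sample line, is the kind of entry this surfaces quickly.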

You can then use the timing to look for any other files whose change time falls in the same period.

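find /path/to/investigate -type f -ctime -N

Replace N with the number of days you want to look back; the leading minus means “changed less than N days ago”. Without it, find only matches files changed exactly N days ago.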
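When the logs give you a precise time, a tighter window than whole days is often more useful. The following is a minimal sketch, assuming GNU find, whose -newerct test compares a file’s change time against a date string; changed_between is a made-up helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list regular files under a directory whose change
# time (ctime) is after START and not after END.
# Assumes GNU find; START and END are date strings as GNU date would parse
# them, for example '2017-09-01 12:00:00'.
changed_between() {
    local dir="$1" start="$2" end="$3"
    find "$dir" -type f -newerct "$start" ! -newerct "$end" -print
}

# Usage, bracketing the POST seen in the log at 12:19:34:
#   changed_between /home/webuser/public_html \
#       '2017-09-01 12:00:00' '2017-09-01 13:00:00'
```

Widening or narrowing the two timestamps lets you trade noise against the risk of missing a file touched slightly earlier or later in the same session.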

That wraps up the first of these posts. Be on the lookout for the VM so you can test these methods, and your skills, on finding the malware and backdoors on a website.