Or how your server gets used in a reflective DDoS: an anecdote…

So many platitudes in the infosec community go something like:

As a defender you need to be right 100% of the time; the attacker only needs to get it right once.

or

Once the attacker gets in, they need to be right 100% of the time or the blue team will find them.

Well, you can find some truth in these, but there’s another truth.

No matter how hard you try, you will get it wrong at least once.

So, after years of letting my hosting provider or registrar manage DNS, I decided during my last infrastructure move earlier this year to run my own DNS server. That gave me more flexibility and configuration options that are often missing from third-party offerings.

I decided to roll my own BIND server. I studied up on the configuration and settings, created the DNS zones, and when the transition time came it went quite smoothly. After testing and deployment, I called it a day and moved on to the next project.

A few months later I was checking some statistics on my servers and noticed higher network activity from the DNS server. It hosts more than just DNS, though, so I chalked it up to the other services.

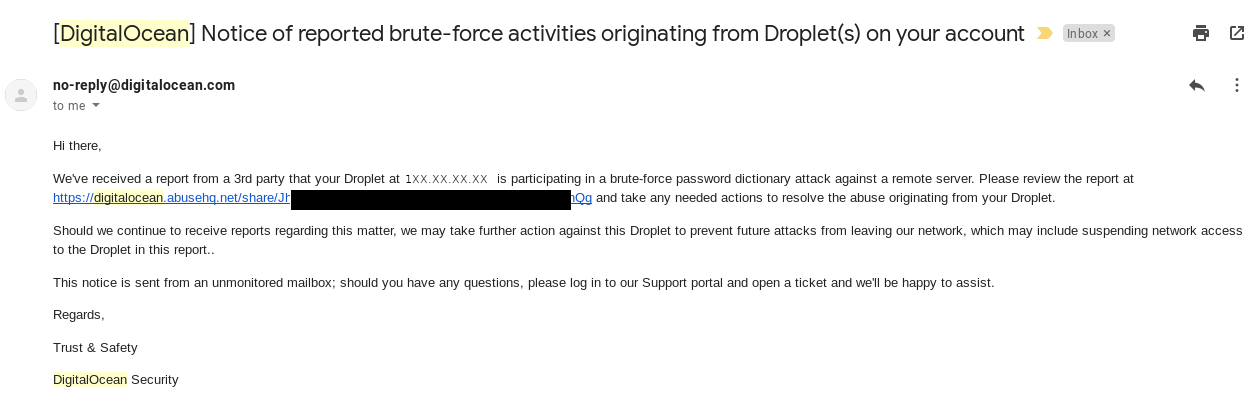

But about a week later I got an email from my hosting provider.

Well, I was quite surprised. Thinking over my setup, I was confused: it was quite locked down, and nothing came to mind, especially nothing that would allow a brute force. I looked over the linked report, which pointed the finger not at a brute force but at DDoS reports. Again, nothing came to mind. So I logged into the server and looked for logs that would tell me more about what was going on. As I expected, the server still seemed in good order and locked down: no malicious SSH access and no web apps that appeared compromised.

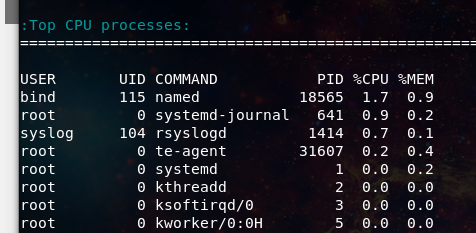

Looking over the load logs, I missed this hint the first time around…

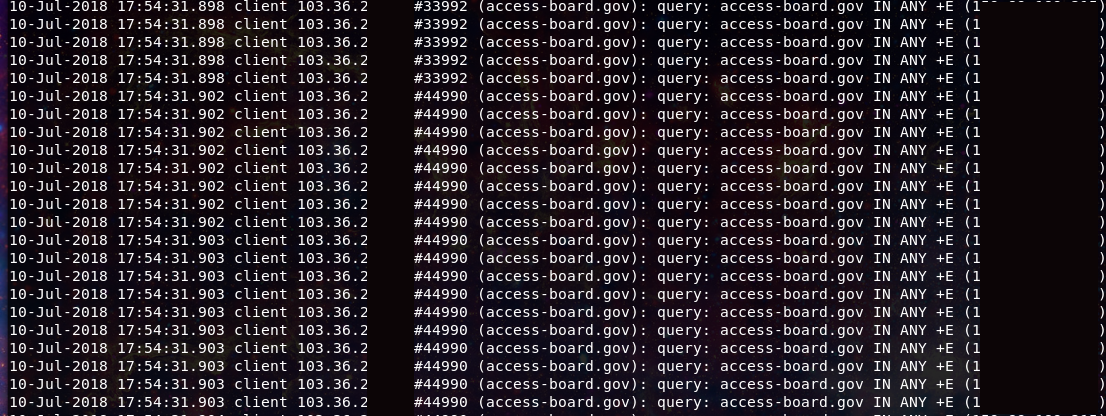

After rereading the abuse complaint a few more times and looking back over the network traffic reports, it became apparent that web traffic could not account for the spike in network activity. I looked at what else might be in play and found an overwhelming number of DNS queries coming into my server.

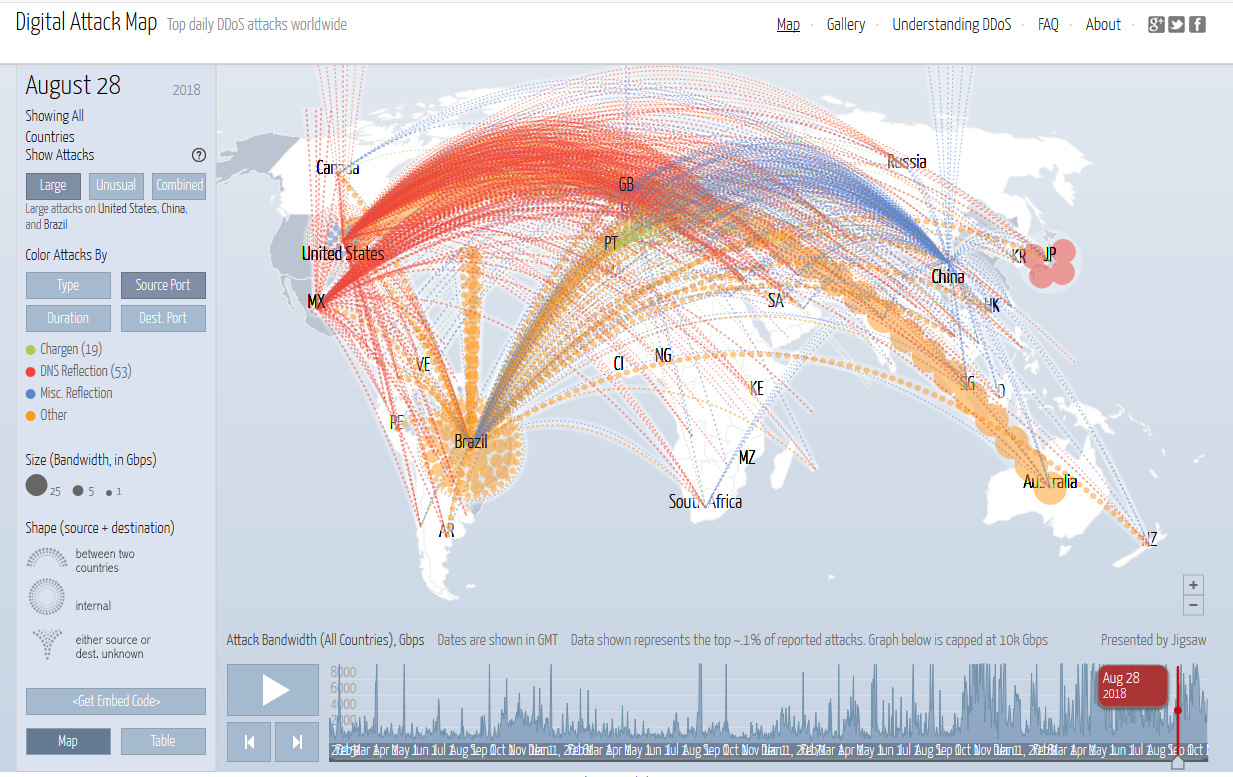

The queries were coming in at incredible rates. Due to a misconfiguration, my server had been recruited into a reflective DDoS attack (read up on this type of attack in the following Cloudflare post).
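Why would an attacker bother going through my server? Two reasons: DNS usually runs over UDP, so the attacker can forge the victim's address as the query's source and the answer goes to the victim; and the answer is often much larger than the question. The Python sketch below just does that arithmetic. The 64-byte query, 3,000-byte response, and 10 Mbit/s attacker uplink are made-up illustrative numbers, not measurements from my server, and the function names are my own.

```python
def amplification_factor(query_bytes: int, response_bytes: int) -> float:
    """Bytes that reach the victim for every byte the attacker sends."""
    return response_bytes / query_bytes


def reflected_bandwidth(attacker_bps: float, factor: float) -> float:
    """Bits per second landing on the victim if every spoofed query gets answered."""
    return attacker_bps * factor


if __name__ == "__main__":
    # Illustrative assumptions only, not measurements.
    factor = amplification_factor(query_bytes=64, response_bytes=3000)
    victim_bps = reflected_bandwidth(attacker_bps=10e6, factor=factor)
    print(f"amplification: {factor:.1f}x")
    print(f"victim receives about {victim_bps / 1e6:.0f} Mbit/s")
```

With those assumed sizes the factor is roughly 47x, so 10 Mbit/s of spoofed queries becomes roughly 469 Mbit/s at the victim, and the victim sees that traffic coming from the resolver rather than from the attacker.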

Over the six-day period I looked at, more than 8 million DNS packets had been reflected from my server to the intended targets. Knowing this, I began to brush up on my BIND configuration knowledge. I figured rate limiting would stop the flood, so I enabled:

```
options {
    // Response Rate Limiting: cap identical answers per client netblock
    rate-limit {
        responses-per-second 2;
    };
};
```
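Response rate limiting (RRL) counts how many identical answers go to the same client network each second and drops (or truncates) the excess. The Python sketch below is a toy model of that idea, not BIND's implementation (BIND's version has more knobs, such as `window` and `slip`). It keeps a one-second counter per client /24 and per hypothetical `response_key` string, handles IPv4 only, and the class and parameter names are my own.

```python
import ipaddress
from collections import defaultdict


class ResponseRateLimiter:
    """Toy model of DNS response rate limiting; NOT BIND's implementation.

    Counts responses per (client /24, response key, whole second) and refuses
    to answer once a bucket exceeds `responses_per_second`. IPv4 only.
    """

    def __init__(self, responses_per_second: int = 2, prefix_len: int = 24):
        self.limit = responses_per_second
        self.prefix_len = prefix_len
        # Buckets are never pruned; a real server would expire old ones.
        self.counts = defaultdict(int)

    def allow(self, client_ip: str, response_key: str, now: float) -> bool:
        # Group clients by netblock, e.g. 203.0.113.7 -> 203.0.113.0/24.
        net = ipaddress.IPv4Network(f"{client_ip}/{self.prefix_len}", strict=False)
        bucket = (net, response_key, int(now))
        self.counts[bucket] += 1
        return self.counts[bucket] <= self.limit


if __name__ == "__main__":
    rrl = ResponseRateLimiter(responses_per_second=2)
    # Ten identical queries forged from one victim address within one second.
    answered = sum(rrl.allow("203.0.113.7", "example.com/ANY", now=100.2) for _ in range(10))
    print(f"answered {answered} of 10")
```

Ten identical queries forged from one victim address in the same second get two answers. A limit like this blunts a flood aimed at one target, but in this model every new target network starts with a fresh count, which may be part of why rate limiting only helped briefly in my case.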

Did it stop? Not quite; only briefly. Next, I tried blocking the addresses showing up in the logs:

```
// Inside options { }: silently drop queries from these addresses
blackhole { 1.1.1.1; 2.2.2.2; /* ...etc */ };
```
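Maintaining that list by hand gets old quickly. Here is a minimal Python sketch that counts source addresses in query-log lines and prints a `blackhole` statement for the busiest ones. It assumes query logging is enabled and that each line names the client as `client <ip>#<port>`, optionally preceded by an `@0x…` handle; check that against your own logs. The sample lines, the threshold, and the function names are made up for illustration.

```python
import re
from collections import Counter

# Assumed query-log shape (check against your own logs), e.g.:
# client @0x1 192.0.2.10#4321 (example.com): query: example.com IN ANY +E(0) (198.51.100.53)
CLIENT_RE = re.compile(r"client (?:@0x[0-9a-fA-F]+ )?([0-9A-Fa-f.:]+)#\d+")


def top_sources(lines, min_queries: int) -> list[str]:
    """Source addresses seen at least `min_queries` times, busiest first."""
    counts = Counter()
    for line in lines:
        match = CLIENT_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return [ip for ip, n in counts.most_common() if n >= min_queries]


def blackhole_statement(ips) -> str:
    """Render a BIND blackhole statement for the given addresses."""
    return "blackhole { " + " ".join(f"{ip};" for ip in ips) + " };"


if __name__ == "__main__":
    # Hypothetical log lines for illustration.
    sample = [
        "client @0x1 192.0.2.10#4321 (example.com): query: example.com IN ANY +E(0) (198.51.100.53)",
        "client @0x2 192.0.2.10#4322 (example.com): query: example.com IN ANY +E(0) (198.51.100.53)",
        "client @0x3 203.0.113.5#5353 (example.org): query: example.org IN A + (198.51.100.53)",
    ]
    print(blackhole_statement(top_sources(sample, min_queries=2)))
```

Keep in mind that in a reflection attack these "clients" are the victims' forged addresses, so a list like this only covers whoever is being targeted right now and has to be regenerated whenever the attacker moves on.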

Did it work? Only until they picked a new target IP. Then I found the key setting I had left out, the one that allowed the abuse:

```
// Inside options { }: no client may use this server for recursion
allow-recursion { none; };
```

With recursion allowed, anyone could ask my server about any domain, and it would go fetch the answer and send it back to whatever source address the query claimed to come from.
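To confirm a fix like this from the outside, you can send a recursive query for a name your server is not authoritative for and inspect the reply. Below is a minimal, standard-library-only Python sketch that hand-builds the query in RFC 1035 wire format and applies a simple heuristic: a reply with a matching ID, the RA (recursion available) bit set, RCODE 0, and at least one answer suggests an open resolver, while a locked-down authoritative server typically answers REFUSED. The function names and the placeholder address are my own, it only speaks IPv4 over UDP, and you should only point it at servers you run.

```python
import secrets
import socket
import struct

RD_FLAG = 0x0100  # recursion desired (set in our query)
RA_FLAG = 0x0080  # recursion available (set by the server in its reply)


def build_query(name: str, txid: int, qtype: int = 1) -> bytes:
    """RFC 1035 query: 12-byte header, QNAME labels, QTYPE (1 = A), QCLASS (1 = IN)."""
    header = struct.pack("!HHHHHH", txid, RD_FLAG, 1, 0, 0, 0)
    labels = name.rstrip(".").split(".")
    qname = b"".join(bytes([len(label)]) + label.encode("ascii") for label in labels) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, 1)


def looks_like_open_resolver(response: bytes, txid: int) -> bool:
    """Heuristic: matching ID, RA bit set, RCODE 0 (NOERROR), and at least one answer."""
    if len(response) < 12:
        return False
    rid, flags, _qd, ancount, _ns, _ar = struct.unpack("!HHHHHH", response[:12])
    return rid == txid and bool(flags & RA_FLAG) and (flags & 0x000F) == 0 and ancount > 0


def check_server(server: str, name: str = "example.com", timeout: float = 3.0) -> bool:
    """Send one recursive A query over UDP and apply the heuristic to the reply."""
    txid = secrets.randbelow(0x10000)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name, txid), (server, 53))
        data, _addr = sock.recvfrom(4096)
    return looks_like_open_resolver(data, txid)
```

For example, `check_server("192.0.2.53")` (substitute your own server's address) should return `False` once recursion is disabled; if no reply arrives within the timeout, the socket raises a timeout error instead.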

Finally, with all of these changes made, the onslaught ended, my server traffic returned to normal, and the server was no longer being co-opted into attacks across the web.

Moral of the story, kids: you won’t be right every time. Build systems that allow for failure, whether technological, process, or people specific. Don’t get beaten up by your failures; learn from them. And of course, don’t run an unprotected open DNS resolver.

Have questions? Let me know @laskow26 on Twitter.